Meredith Whittaker is the new president of the Signal Foundation.

Meredith Whittaker's biography makes for impressive reading. As the founder of Google's Open Research program, she acted as something of an interface between the software company and the academic world. She came to wider public attention in 2018, when she was one of the main organizers of the "Google Walkouts" against sexual harassment and discrimination, which made waves in the tech world far beyond Google. For Whittaker herself, the protests also marked a break with her longtime employer, which she left in 2019.

At the same time, she is also the founder of, and a professor at, New York University's AI Now Institute – making her a proven expert on artificial intelligence, especially the ethical issues that come with it. She has also advised the White House, the European Parliament, and the U.S. Federal Trade Commission (FTC).

Signal calls

Now she is moving on to the next stage of her career: Whittaker is taking on the role of president of the Signal Foundation, which supports the development of the encrypted messenger Signal. In an interview with DER STANDARD, she explains, among other things, what she thinks of plans to weaken encryption, why it is wrong to talk about "online hate" in a detached way, and why the "surveillance business model" is one of the central problems on the Internet. Naturally, AI, and why she considers it fundamentally flawed, is another of the topics discussed.

STANDARD: Right now a lot of countries are pushing for new laws to weaken end-to-end encryption – or sidestep it. The UK already has draft legislation which would force messengers to scan for child sexual abuse material (CSAM). The EU is actively discussing similar measures, and Apple has already shown such a system. So let me play devil's advocate here: All of them justify this with child protection. What's wrong with that?

Whittaker: This is a very popular variety of magical thinking, and it seems to emerge like mushrooms after rain every few years. But the truth is always the same: There's no spell you can cast to break encryption for one purpose and not break it for every other purpose. Either it is robust and it works, or it's broken. And we unequivocally stand by strong encryption; there's no negotiating on that point.

STANDARD: But how do you oppose this? I mean, it's a very real possibility that those countries could pass such laws. So you'll have to handle this in some way...

Whittaker: I'm not in Signal yet, so I have not started the process of creating a policy agenda. I'm going to be forming that once I'm inside and have all of the information at hand. I don't have a good answer to that right now, sorry. Because that needs a really precise answer. And you're going to need some careful thinking that will be in step with the sort of dynamism of these kinds of issues.

"There's no spell you can cast to break encryption for one purpose and not break it for every other purpose"

STANDARD: All of that ties into another big topic: platform moderation. Right now – at least in Europe – Telegram is getting a lot of criticism for also being the platform used by Covid deniers and far-right groups to spread hate and organize their actions. Let's hypothetically say Telegram gets blocked or starts moderating itself more actively and many of those groups move to Signal. How would you react?

Whittaker: There are a lot of features Telegram has and Signal doesn't. Signal is not a social network. It is not an algorithmically amplified content platform. It is a messaging service.

STANDARD: So what you are saying is that you consciously choose not to develop certain features – like Telegram channels – so as not to even get into such a situation?

Whittaker: Absolutely. The team is extremely smart and does a lot of thinking, you know, before any feature is decided on. We keep all kinds of variables in mind from the platform or operating system we're deploying on to the norms and expectations of the people who use Signal to the sort of overall policy environment into which a given feature will be launched.

STANDARD: All of that ties into a general discussion about platform security and privacy on the one hand and the fight against harassment and hate on the other. Is it even possible – or desirable – to have perfectly private software, where groups of up to 100 people could also organize for harassment and violence?

Whittaker: I would push back on the framing, frankly. Discrimination and harassment are not products of digital technologies. They are products of skewed social cultures. They are products of uncomfortable and bad histories. They are products of the societies in which we live.

They manifest through various forms of communication and action and the structures of our institutions. But they don't spring from digital communications or digital technologies, and they're not going to be solved by tweaking digital technologies without looking at the root issues. The root issue is not the technology; it's the overall social structure in which we live.

"Discrimination and harassment are not products of digital technologies. They are products of skewed social cultures."

STANDARD: The recently introduced Digital Markets Act in the EU asks for messenger interoperability, while Signal has openly opposed this idea. Historically, open-source projects similar to yours strongly favored having a multitude of clients. So what has changed?

Whittaker: I don't know that anything has changed for Signal. I think there's a difference between the DMA's definition of a social platform and its terms of interoperability, and the broader goal of ensuring that different technologies can interact with each other such that you don't have market monopolization.

Speaking for Signal, I can say our privacy bar is very high and we're not going to compromise that – full stop. So currently, if we were to interoperate with, say, iMessage or WhatsApp, we would have to lower our privacy bar for our users and everyone else. So we're not going to adapt to somebody else's privacy bar just to fulfill an interoperability goal.

STANDARD: Would you say this is a good example of misguided regulation? In the sense that it's well-meant but maybe not fully thought through? If Signal gets big enough, you will also have to do this; you will have to be interoperable with other clients...

Whittaker: I think that I understand the spirit of the regulation. And when Signal does get big enough, again, we're happy to talk interoperability without sacrificing our privacy bar. If other large services wanted to interoperate and provide the people who use them with that level of privacy, that is a huge success for us. We are driven by a mission to provide that privacy, and we're pretty agnostic as to whether they're using something called Signal or something called a service that operates with Signal on the same terms. But the variable that is important to us is maintaining that privacy bar.

STANDARD: You're also a senior advisor to the chair at the U.S. Federal Trade Commission (FTC), which plays a central role in regulating big tech. So how would you generally classify the progress in regulating technology?

Whittaker: I actually just ended my term and it was a very eye-opening experience. I'm cautiously optimistic that policymakers and lawmakers and others outside of the industry are starting to understand both the technical specifics of how technology actually works and the material basis for the business model. So the mystified narrative that painted computational technology from specific tech companies as synonymous with scientific progress and innovation has been dismantled over the past five or so years – that's very positive.

I think the way that the network effects of the surveillance business model have created monopolies out of a handful of companies, the way that data flows, the way that these sort of business models lock people in in a certain way, all of that is becoming clearer. And I would say that after about 15 years where it was very difficult to have a conversation with anyone in government or policy about technology without them sort of valorizing it, or assuming that data would save us all or wanting the government to run like Google or all of these perspectives that were very untethered from reality and that were very much informed by lobbyists and others from these tech companies.

STANDARD: But do you see real progress happening? In general, governments and regulators are very slow-moving, and so far big tech mostly seems to have evaded any real consequences, while those new laws often have unintended side effects for smaller players...

Whittaker: I think a lot of substantial things have happened. GDPR was substantial. Does it solve the entire problem? No. Was it kind of messy? Absolutely right. But there have been moves toward more informed regulatory approaches. And look, I'm not a wizard. I don't know exactly what's going to happen.

I've never believed, nor do I believe now, that the totality of the solution to the extremely troubling and complex problems that big tech hegemony confronts us with lies in regulation. It's going to take a lot more. I can say from my personal experience over almost 20 years that it is much easier to have a conversation with these folks that is based in reality than it was even five years ago, let alone ten years ago.

"GDPR was substantial. Does it solve the entire problem? No. Was it kind of messy? Absolutely right. But there have been moves toward more informed regulatory approaches."

STANDARD: So let me just give you a little bit of a cynical view of the tech world right now...

Whittaker: Never heard it... [laughs]

STANDARD: Just tell me if I'm wrong. Right now the tech world basically looks like this: We have big tech on one side, startups that want to be bought by big tech on the other, and a few niche players for privacy-conscious users on the edges. Do you think that there's a real chance for disruption? Or will privacy stay something for a niche of very well-informed users?

Whittaker: I don't disagree with your analysis. It's pretty clear that the industry has sort of shaped into a natural monopoly via the network effects of the surveillance business model. Full stop. That's not contestable. But look at the Google Play Store and you can see that Signal has been downloaded over 100 million times – and that's just one place.

I think there is a desire for safe communication; people get that it's necessary. And the desire for Signal is only growing. So I'm really optimistic about Signal's prospects. I wouldn't be putting all of my time into this if I weren't.

STANDARD: In more general terms: How do you see the role of a comparatively small player like Signal in a world ever more dominated by big tech?

Whittaker: The environment in which Signal operates was built by a tech business model that Signal rejects: the surveillance business model. It is more and more necessary that a player like Signal exists, even though it can be challenging to operate in that environment.

Developing high-availability software is very expensive, so ensuring that we have a model for sustainability is important. Most efforts fund their development and maintenance through some variety of the surveillance business model, and Signal rejects that. But that doesn't mean it's any cheaper for us. It costs us tens of millions of dollars a year to develop and maintain and care for Signal. And that cost is never going away. It also goes up relative to the number of people who use Signal.

I also want to emphasize that tens of millions of dollars a year is a very small budget compared to our competition. WhatsApp has over a thousand engineers – and that's just engineers. Telegram has over 500 employees, and they raised billions of dollars through a cryptocurrency scheme.

Signal in comparison is extremely competent but also fairly small. It's a total of around 40 people. And that is everyone at Signal. That is designers, that is the engineers, that is the product managers. That's the people who do support and help the people who rely on Signal to use it.

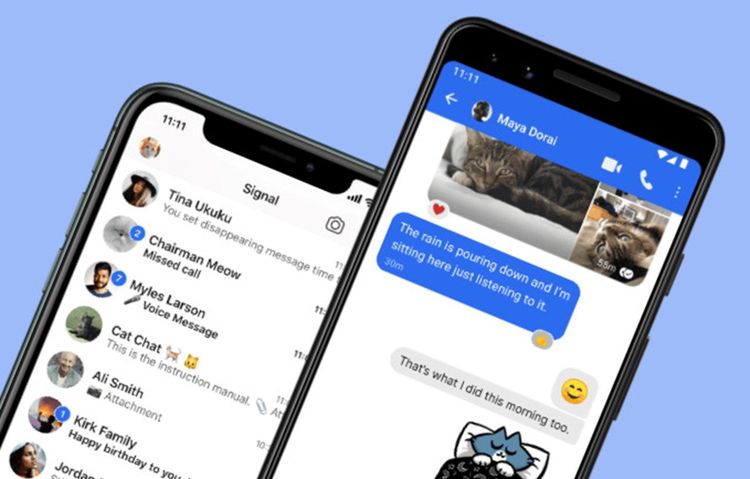

And because of the ecology in which Signal exists, Signal needs to effectively develop three separate clients or products. You need to develop one for the iOS operating system, one for Android, one for desktop. Then you have to harmonize all of those under one umbrella, make sure they talk to each other, make sure that they look and feel the same. You then need to pay for hosting and registration – which are very expensive and which are non-negotiable.

"The environment in which Signal operates, was built by a tech business model that Signal rejects: the surveillance business model."

STANDARD: Right now you are still relying heavily on donations from [WhatsApp founder and former Signal President] Brian Acton. This doesn't sound like a very sustainable plan for the long-term future. I guess advertising is out of the question?

Whittaker: We won't participate in the surveillance business model and we're really grateful to Brian for providing the support that lets us build on that foundation. We're just starting efforts to build a model that relies on the many millions of people who use Signal contributing a little bit so that Signal can continue to grow and thrive even in an environment that is shaped by the surveillance business model.

STANDARD: Do you think that donations alone are going to be enough to secure the financial sustainability of Signal?

Whittaker: That is the road that we are trying now. You may have seen badges, which are sort of a little icon that lets people know that you're supporting Signal. And we're already doing pretty well there. I don't have numbers to share, but so far we haven't done much work to make people aware that we are looking for donations – and still people seem to have responded really well. I think in the case of Signal, the people who use Signal and increasingly the public at large understand the need for a place for safe and intimate communications outside of the surveillance apparatus of corporations and government.

STANDARD: You have also been experimenting with crypto payments...

Whittaker: We have an option to take crypto payments. Yeah.

STANDARD: Crypto has been getting a lot of criticism, especially lately. So is this something to reconsider?

Whittaker: I'm not inside at this point, so I don't have a decisive answer on that. I think the MobileCoin integration is very experimental and Signal's core function is private messaging, and that's what I'm committed to.

STANDARD: So you think that Signal should focus on the core private messaging stuff and not try to add ever more features?

Whittaker: If a feature is necessary because the norms and expectations around communication require it, then I think it is great to add that feature. But we are lucky because we are not driven by quarterly earnings reports and shareholder expectations. We don't have to continue to pretend to innovate, continue to add features no one wants. To look like Microsoft Office at the end of the day. We get to really focus on the fundamentals and make sure it's working, make sure it is robust.

And then think about: have we done that? Are we in a position where we can continue to support our core offering – which is safe and private communications – and branch out in a way that won't overstretch us, that won't stress out the workers who are doing that work day in and day out, that won't sort of create a bloated organization that isn't healthy.

So we're going to do the thing we do as well as possible. And when that is a well-oiled machine – and only then – will we think about doing other things.

"We don't have to continue to pretend to innovate, continue to add features no one wants. To look like Microsoft Office at the end of the day."

STANDARD: You are also a very well-known AI expert – and critic. Recently you called AI a "technique of the powerful". How can that be changed? Is a progressive use of AI possible, and what would that look like?

Whittaker: Another thing I've called AI is a surveillance derivative. The changes we've seen over the past decade with regard to AI are not due to scientific advances in algorithmic models or AI techniques. They are due to the concentrated data resources and the computational infrastructure that tech companies have access to. So those are the ingredients that led to the resurgence of AI over the past decade.

AI is a 70-year-old field. The reason it's popular now is that it allows these companies to make more profits using the surveillance data they have and to apply the AI models they build with this data in new markets that expand their growth and reach.

STANDARD: Companies like Apple and Google are increasingly using machine learning to run complex features locally on a smartphone, which also means your data doesn't have to be uploaded to some server and analyzed there. So isn't this an example of a "good", privacy-preserving usage of AI?

Whittaker: I think we have to be really careful about how we define privacy. I was around when some of the people at Google were thinking of using differential privacy techniques to do on-device learning, which is what they call that. We train the model, then we sub-train it on your device and then send those learnings up to our servers. Then we have access to those – but not your data – and we're able to say it's private.

But the insights from that data are what's valuable about the data. The ability for that data to be squished into an AI model and to apply that model back on us to make decisions and predictions and classifications about us. That produces extremely intimate data in many cases. You can say that based on the shape of Meredith's face, she is likely to be X, Y or Z – a shoplifter, a bad worker, whatever it is. That doesn't need to be true to have serious effects on my life.

So I don't know that we can blithely say that something is private if it is in fact giving these companies or whoever controls that infrastructure the ability to make decisions and make labels about us that have that significant effect – and that we usually don't know about and can't contest.

STANDARD: So is there even the possibility of a "good" usage of AI?

Whittaker: Hypothetically? Of course. But I think we need to get back to the first observation. The AI we're talking about now is a product of concentrated surveillance data and infrastructure. So in reality, it's the folks who have the ability to access both of those – which is a handful of companies – that are going to be able to create the AI. And thus the AI will be created within a structure that incentivizes certain uses and disincentivizes others.

I have a paper I wrote on this recently that gets into some more of my thinking around this. It's called "The Steep Cost of Capture". It goes through some of this history and some of these dynamics. I think understanding the material basis for AI is really important to understanding that, yes, we have many hypothetical good uses, but ultimately, like if we don't look at sort of the power structures of who gets to create them and the business model by which AI is created, we're not going to understand why those good uses are so few and far between.

STANDARD: Coming back to your new role as the president of the Signal Foundation: How would you describe your assignment?

Whittaker: At a high level, I'm going to be guiding strategy. I'm going to be building policy awareness carefully and cautiously in time with the team. I'm going to be working on those questions of long-term sustainability. It's imperative that we create models that don't rely on the surveillance business model and that nonetheless can support high-availability software. And I'm going to be ensuring that as an organization, we're communicating clearly.

STANDARD: I guess part of your job is also signaling (no pun intended) trust to the users, by you being who you are – an outspoken critic of big tech...

Whittaker: I have always been willing to speak up and oppose powerful people when I think it's important. But the trust that people place in Signal is in part because the Signal protocol has been so carefully and robustly designed and reviewed by the security community. Maintaining the ties to that community and ensuring that every step we take has that much care and precision is going to be important.

(Andreas Proschofsky, 6.9.2022)